Chapter 10 Extended Example: Reasoning About Goodness of Fit

This chapter is a bit different: we introduce the idea of “goodness of fit” through implementing the analysis in a blog post discussing a disputed paper in a Psychology journal. You can find the article here: http://datacolada.org/74

library(tidyverse)10.1 Go and read the blog post

To start, go read this blog post: http://datacolada.org/74. This should take you at least an hour or so to do in detail, if not longer.

Exercise: summarize this study in your own words. Two or three sentences should suffice. What did they do, how did they do it, and what were they trying to find out? It is important that you understand this before you move on.

10.2 Distribution of last digits

The distribution of digits in numeric data is of considerable interest in certain fields. In forensic accounting, where investigators try to identify fraudulent accounting practice by identifying systematic anomalies in financial records, the relative frequency with which different digits occur can indicate potential fraud if it differs from what you would expect. It is well-established that the last digit, in particular, of numeric data should be distributed pretty evenly between the numbers \(0 - 9\) in any given set of data.

Remark: this isn’t trivial; for example, Benford’s Law says that this is not true about the first digit in numbers in certain types of financial data.

So, let’s look at the last digit of the measurements from the hand sanitizer study.

The data is available from http://datacolada.org/appendix/74/ and their R code can be found here. Here we

use our own R code but I borrow parts from theirs.

10.2.1 Read in the data

First, read in the data as usual:

study1 <- readr::read_csv(

file = "http://datacolada.org/appendix/74/Study%201%20-%20Decoy%20Effect.csv",

col_names = TRUE,

col_types = stringr::str_c(rep("n",42),collapse = "")

)

glimpse(study1)Rows: 40

Columns: 42

$ Subject <dbl> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, …

$ `Group (1=experimental condition, 0 = control condition)` <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0

$ Day1 <dbl> 55, 60, 63, 55, 60, 60, 35, 55, 45, 75, 50, 70, 60, 40, 35, 50, 50, 60, 55, 50, 50, 25, 45, 60, 70, 10, 60, 80, 55, 70, 45, 55, 25, 55, 45,…

$ Day2 <dbl> 45, 55, 40, 50, 40, 20, 40, 40, 50, 55, 45, 55, 45, 45, 50, 55, 45, 50, 55, 60, 60, 35, 45, 65, 15, 25, 50, 60, 50, 45, 50, 50, 45, 35, 60,…

$ Day3 <dbl> 45, 55, 50, 40, 15, 90, 85, 20, 90, 35, 55, 50, 30, 35, 60, 65, 45, 60, 60, 60, 55, 50, 60, 60, 40, 60, 60, 25, 40, 55, 25, 55, 30, 50, 40,…

$ Day4 <dbl> 40, 55, 60, 40, 40, 60, 45, 35, 90, 45, 55, 55, 35, 35, 60, 60, 35, 45, 45, 57, 55, 45, 53, 70, 40, 37, 60, 85, 25, 37, 30, 45, 30, 50, 43,…

$ Day5 <dbl> 45, 50, 25, 45, 75, 65, 55, 30, 55, 60, 53, 50, 50, 40, 65, 63, 47, 40, 55, 60, 53, 40, 47, 63, 47, 40, 64, 95, 43, 33, 30, 53, 25, 43, 43,…

$ Day6 <dbl> 45, 45, 35, 60, 65, 60, 45, 50, 45, 40, 55, 50, 43, 50, 55, 65, 50, 43, 53, 63, 55, 50, 45, 43, 65, 57, 70, 75, 53, 47, 33, 55, 47, 43, 55,…

$ Day7 <dbl> 55, 45, 55, 85, 60, 40, 40, 45, 45, 45, 50, 40, 40, 45, 55, 60, 37, 47, 55, 60, 75, 43, 47, 63, 75, 70, 73, 65, 50, 40, 35, 55, 45, 37, 50,…

$ Day8 <dbl> 60, 40, 40, 55, 55, 37, 37, 60, 90, 50, 55, 45, 40, 30, 63, 60, 35, 35, 50, 60, 40, 34, 30, 60, 78, 60, 70, 65, 47, 30, 33, 45, 37, 32, 47,…

$ Day9 <dbl> 45, 40, 33, 65, 60, 33, 40, 40, 100, 40, 45, 43, 37, 30, 60, 63, 30, 43, 55, 60, 60, 40, 25, 60, 60, 65, 95, 87, 65, 45, 37, 50, 43, 33, 50…

$ Day10 <dbl> 67, 40, 50, 80, 50, 60, 45, 50, 93, 36, 40, 40, 50, 45, 60, 65, 50, 50, 53, 60, 65, 45, 50, 60, 70, 78, 83, 83, 85, 55, 49, 53, 45, 40, 55,…

$ Day11 <dbl> 65, 56, 40, 65, 60, 80, 65, 75, 87, 50, 50, 43, 53, 50, 65, 65, 47, 55, 47, 55, 70, 40, 37, 36, 75, 78, 75, 65, 80, 55, 50, 55, 55, 40, 55,…

$ Day12 <dbl> 70, 40, 50, 65, 60, 60, 45, 55, 80, 60, 55, 43, 45, 55, 65, 60, 55, 55, 50, 55, 70, 40, 55, 55, 75, 70, 73, 53, 88, 58, 45, 50, 40, 55, 40,…

$ Day13 <dbl> 80, 40, 25, 70, 60, 60, 55, 75, 89, 65, 45, 40, 37, 65, 65, 70, 40, 45, 55, 47, 65, 45, 50, 30, 55, 80, 75, 55, 80, 55, 40, 55, 40, 47, 43,…

$ Day14 <dbl> 75, 45, 45, 50, 70, 70, 55, 70, 80, 70, 50, 40, 55, 55, 60, 60, 45, 43, 47, 55, 65, 45, 47, 35, 75, 85, 75, 55, 83, 45, 37, 50, 37, 47, 50,…

$ Day15 <dbl> 70, 50, 50, 65, 60, 65, 55, 75, 75, 75, 50, 45, 55, 55, 65, 55, 45, 55, 40, 57, 70, 45, 40, 40, 80, 80, 67, 75, 85, 50, 45, 55, 40, 45, 40,…

$ Day16 <dbl> 80, 55, 50, 65, 60, 55, 50, 95, 80, 70, 55, 45, 55, 50, 65, 75, 40, 50, 55, 50, 80, 40, 40, 47, 75, 73, 75, 80, 87, 55, 55, 45, 40, 50, 43,…

$ Day17 <dbl> 70, 55, 45, 65, 55, 60, 50, 85, 85, 70, 55, 40, 65, 60, 60, 70, 43, 55, 65, 47, 65, 40, 57, 45, 77, 70, 70, 80, 90, 45, 40, 45, 45, 55, 43,…

$ Day18 <dbl> 75, 50, 45, 55, 55, 55, 45, 80, 80, 60, 50, 40, 60, 50, 55, 70, 40, 50, 55, 45, 65, 43, 57, 45, 80, 70, 70, 70, 90, 55, 45, 45, 45, 50, 45,…

$ Day19 <dbl> 70, 45, 45, 60, 65, 75, 40, 85, 80, 65, 45, 40, 65, 45, 55, 60, 47, 43, 45, 45, 65, 43, 55, 40, 75, 70, 63, 55, 80, 45, 45, 55, 40, 45, 45,…

$ Day20 <dbl> 65, 50, 40, 60, 70, 70, 55, 85, 75, 55, 45, 43, 55, 65, 65, 55, 55, 40, 40, 70, 65, 50, 50, 40, 75, 75, 60, 50, 85, 45, 40, 50, 40, 45, 55,…

$ `Day21 (Beginning of intervention)` <dbl> 85, 55, 65, 80, 70, 90, 65, 85, 85, 75, 55, 40, 60, 70, 65, 65, 60, 45, 45, 60, 70, 55, 55, 50, 80, 75, 65, 75, 80, 55, 40, 45, 45, 55, 50,…

$ Day22 <dbl> 85, 75, 65, 75, 75, 45, 50, 95, 60, 55, 50, 45, 65, 55, 55, 60, 55, 55, 40, 45, 85, 60, 50, 25, 75, 85, 60, 30, 90, 45, 40, 60, 50, 55, 50,…

$ Day23 <dbl> 90, 45, 70, 90, 65, 75, 70, 90, 80, 70, 85, 55, 70, 45, 80, 70, 45, 50, 55, 55, 75, 45, 40, 35, 75, 70, 80, 70, 90, 60, 45, 55, 55, 40, 65,…

$ Day24 <dbl> 85, 60, 55, 120, 80, 80, 75, 90, 85, 70, 70, 55, 70, 65, 75, 70, 65, 65, 55, 60, 80, 45, 45, 55, 65, 75, 70, 90, 85, 60, 45, 50, 40, 45, 60…

$ Day25 <dbl> 75, 50, 60, 105, 70, 100, 75, 95, 70, 75, 70, 75, 75, 60, 70, 85, 70, 45, 50, 55, 65, 55, 60, 40, 80, 70, 45, 70, 80, 45, 55, 45, 35, 55, 4…

$ Day26 <dbl> 75, 50, 70, 100, 70, 95, 65, 85, 60, 45, 75, 80, 55, 75, 70, 90, 65, 45, 65, 65, 60, 5, 60, 40, 65, 75, 60, 75, 70, 55, 55, 55, 45, 55, 55,…

$ Day27 <dbl> 65, 35, 55, 95, 55, 75, 60, 70, 70, 70, 80, 60, 75, 45, 75, 75, 35, 15, 60, 70, 75, 50, 55, 45, 60, 45, 35, 65, 85, 35, 50, 55, 20, 45, 40,…

$ Day28 <dbl> 75, 55, 40, 90, 60, 80, 75, 60, 70, 75, 55, 55, 65, 45, 80, 70, 45, 65, 65, 35, 80, 25, 45, 35, 80, 60, 50, 60, 90, 60, 45, 50, 50, 40, 55,…

$ Day29 <dbl> 75, 60, 65, 90, 80, 75, 65, 75, 85, 70, 60, 65, 80, 70, 55, 85, 60, 60, 55, 60, 70, 35, 25, 25, 75, 70, 65, 70, 70, 45, 30, 65, 55, 55, 45,…

$ Day30 <dbl> 80, 55, 65, 80, 85, 80, 60, 70, 75, 60, 65, 70, 75, 85, 75, 70, 65, 65, 70, 60, 60, 55, 35, 40, 70, 75, 75, 85, 75, 50, 50, 45, 50, 50, 60,…

$ Day31 <dbl> 75, 65, 70, 85, 75, 60, 55, 65, 75, 65, 60, 55, 80, 65, 60, 85, 55, 25, 80, 70, 75, 65, 45, 45, 75, 85, 70, 65, 70, 50, 40, 45, 45, 55, 55,…

$ Day32 <dbl> 50, 45, 75, 125, 75, 65, 40, 85, 75, 55, 60, 65, 65, 50, 75, 75, 50, 40, 55, 70, 85, 45, 25, 60, 65, 70, 85, 55, 65, 55, 35, 40, 55, 45, 55…

$ Day33 <dbl> 70, 40, 55, 120, 95, 70, 55, 80, 80, 50, 65, 70, 75, 55, 70, 65, 40, 50, 75, 60, 85, 70, 40, 55, 70, 65, 50, 65, 55, 35, 40, 45, 55, 45, 40…

$ Day34 <dbl> 75, 55, 50, 110, 70, 60, 60, 80, 85, 55, 55, 75, 75, 60, 55, 70, 75, 55, 75, 75, 70, 70, 55, 35, 75, 75, 70, 60, 75, 50, 55, 45, 45, 50, 45…

$ Day35 <dbl> 65, 55, 65, 90, 85, 85, 55, 95, 80, 45, 75, 55, 70, 40, 70, 70, 70, 55, 70, 75, 75, 75, 50, 30, 55, 70, 75, 60, 70, 30, 50, 50, 35, 55, 55,…

$ Day36 <dbl> 75, 60, 45, 95, 90, 75, 60, 90, 85, 70, 65, 80, 90, 65, 65, 75, 45, 65, 75, 65, 75, 55, 45, 55, 55, 75, 55, 70, 65, 55, 45, 45, 45, 50, 45,…

$ Day37 <dbl> 70, 50, 50, 105, 45, 65, 75, 75, 70, 35, 65, 85, 65, 70, 80, 90, 55, 55, 60, 80, 55, 60, 40, 40, 60, 65, 90, 65, 80, 65, 40, 45, 50, 40, 65…

$ Day38 <dbl> 75, 45, 55, 110, 75, 75, 70, 70, 80, 65, 70, 80, 60, 60, 65, 85, 50, 60, 85, 70, 75, 60, 30, 35, 65, 60, 80, 60, 70, 40, 55, 40, 70, 45, 55…

$ Day39 <dbl> 90, 65, 65, 115, 80, 80, 55, 65, 90, 60, 80, 70, 75, 55, 90, 80, 70, 65, 55, 75, 90, 55, 55, 40, 70, 65, 65, 75, 90, 60, 60, 45, 55, 55, 50…

$ Day40 <dbl> 85, 70, 65, 105, 75, 85, 75, 70, 95, 65, 65, 75, 70, 60, 85, 80, 60, 75, 80, 90, 80, 45, 40, 45, 60, 80, 75, 55, 65, 55, 55, 60, 50, 60, 55…Exercise: download the data by pasting the link into a web browser.

Print out the header on the command line or open the .csv file in Excel or

otherwise. Verify that the col_names and col_types arguments I provided

are correct.

The data is in “wide” format– each day has its own column. We want one column for subject ID, one for Day, and one for measurement. Let’s reformat the data:

study1_long <- study1 %>%

pivot_longer(Day1:Day40,names_to = "day",values_to = "measurement")

glimpse(study1_long)Rows: 1,600

Columns: 4

$ Subject <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2…

$ `Group (1=experimental condition, 0 = control condition)` <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…

$ day <chr> "Day1", "Day2", "Day3", "Day4", "Day5", "Day6", "Day7", "Day8", "Day9", "Day10", "Day11", "Day12", "Day13", "Day14", "Day15", "Day16", "Day…

$ measurement <dbl> 55, 45, 45, 40, 45, 45, 55, 60, 45, 67, 65, 70, 80, 75, 70, 80, 70, 75, 70, 65, 85, 85, 90, 85, 75, 75, 65, 75, 75, 80, 75, 50, 70, 75, 65,…We previously had \(40\) subjects each with \(40\) columns of measurements; now we have \(1,600\) rows, which looks good to me. Let’s further clean up the data: we need to

- Rename the colums so they are pleasant (yes, this is important!),

- Extract the last digit of each measurement and save it in a new column.

Let’s do this. We’ll use the substr function to choose the last digit of each

number. Type ?substr to learn about this function.

study1_clean <- study1_long %>%

rename(subject = Subject,

group = `Group (1=experimental condition, 0 = control condition)`) %>%

mutate(last_digit = as.numeric(substr(measurement,nchar(measurement),nchar(measurement))))

glimpse(study1_clean)Rows: 1,600

Columns: 5

$ subject <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,…

$ group <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…

$ day <chr> "Day1", "Day2", "Day3", "Day4", "Day5", "Day6", "Day7", "Day8", "Day9", "Day10", "Day11", "Day12", "Day13", "Day14", "Day15", "Day16", "Day17", "Day18", "Day19", "Day20", "Day21 (Beginn…

$ measurement <dbl> 55, 45, 45, 40, 45, 45, 55, 60, 45, 67, 65, 70, 80, 75, 70, 80, 70, 75, 70, 65, 85, 85, 90, 85, 75, 75, 65, 75, 75, 80, 75, 50, 70, 75, 65, 75, 70, 75, 90, 85, 60, 55, 55, 55, 50, 45, 4…

$ last_digit <dbl> 5, 5, 5, 0, 5, 5, 5, 0, 5, 7, 5, 0, 0, 5, 0, 0, 0, 5, 0, 5, 5, 5, 0, 5, 5, 5, 5, 5, 5, 0, 5, 0, 0, 5, 5, 5, 0, 5, 0, 5, 0, 5, 5, 5, 0, 5, 5, 0, 0, 0, 6, 0, 0, 5, 0, 5, 5, 0, 5, 0, 5, 5,…Looks clean to me!

10.2.2 Make the histogram

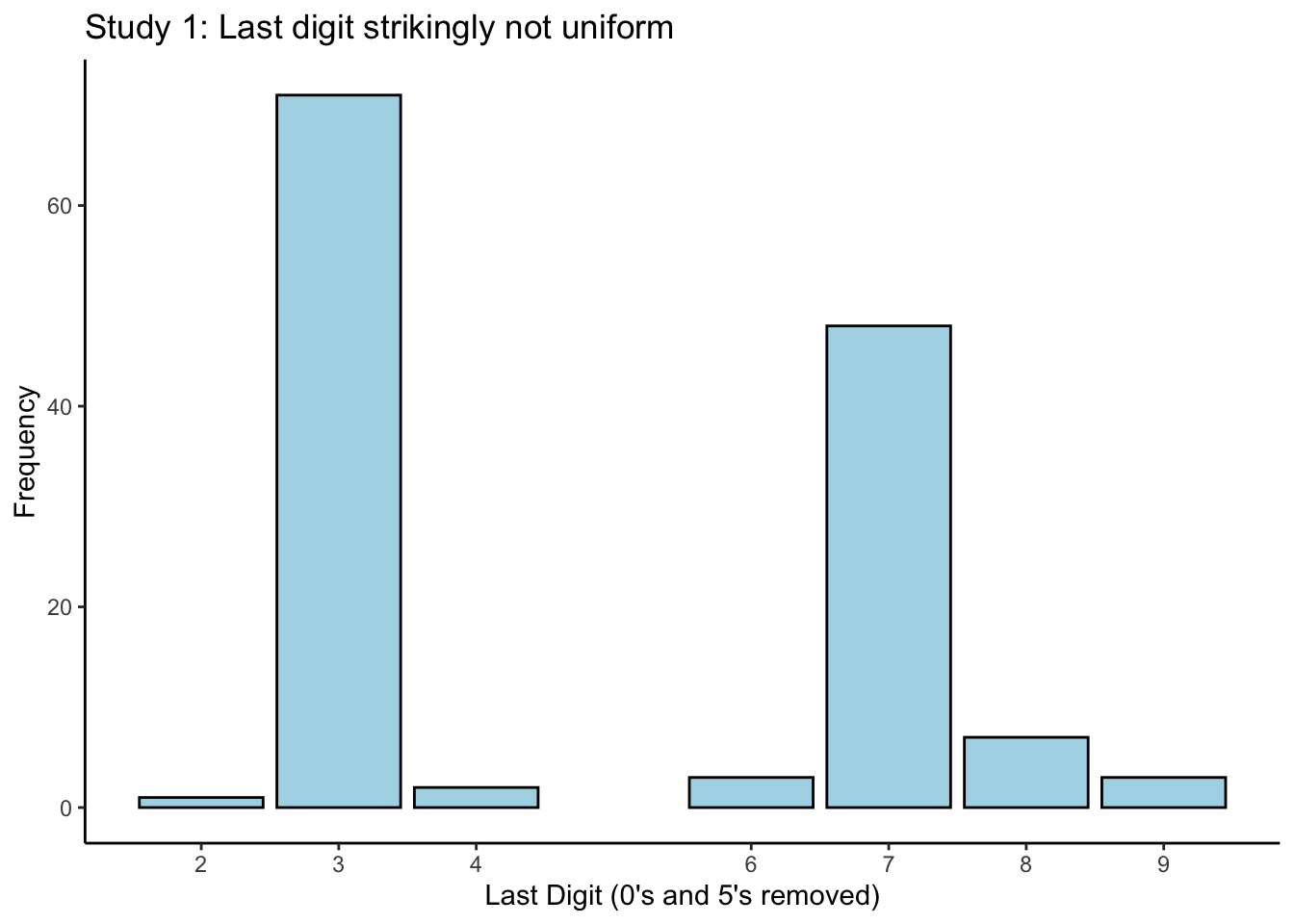

Okay, now consider Figure 1 in the blog post. They filter out observations ending in \(0\) or \(5\) (remember this later), because the authors of the disputed paper claim to have occasionally used a scale with \(5\)-gram precision, and occasionally one with \(1\)-gram precision (this alone is suspect…). They then make a histogram of all the last digits. We can do this too:

study1_clean %>%

filter(!(last_digit %in% c(0,5))) %>%

ggplot(aes(x = last_digit)) +

theme_classic() +

geom_bar(stat = "count",colour = "black",fill = "lightblue") +

scale_x_continuous(breaks = c(1,2,3,4,6,7,8,9),labels = c(1,2,3,4,6,7,8,9)) +

labs(title = "Study 1: Last digit strikingly not uniform",

x = "Last Digit (0's and 5's removed)",

y = "Frequency")

I called this plot a “histogram” (which it is), but I used geom_bar(stat = "count") to create

it. I did this because I wanted the bins to be at specific values. It’s still a histogram (why?).

10.2.3 Testing goodness of fit: simulation

If you expect the last digits of a set of numbers to be evenly-distributed across the values \(0 - 9\), then this plot might look surprising. But can we conclude that something is wrong, just by looking at a plot? What if the real underlying distribution of digits is actually evenly-distributed, and we just got a weird sample– we’d be making an incorrect harsh judgement.

We’re going to ask the question: if the underlying distribution of last digits really was evenly distributed, what is the probability of seeing the data that we saw, or a dataset that is even more extreme under this hypothesis?.

That’s a mouthful! But it’s a good question to ask. If it’s really, really unlikely that we see what we saw (or something even further) if the claim of even distribution is true, then this provides evidence against the notion that the digits are actually evenly distributed. And this provides evidence that there is something funny in the data.

We are going to do a simulation to investigate this claim. We are going to

- Generate a bunch of datasets the same size as ours but where the distribution of the last digit actually is evenly distributed across \(0 - 9\), and

- Record the proportion of digits in each that are \(3\) or \(7\), and

- Compute the proportion of our simulated datasets that have a proportion of 3’s or 7’s as high, or higher, as what we saw in our sample.

There are a few details that we can’t get exactly right here: the real data was generated by sampling a bunch of values that ended in \(0's\) or \(5's\) and then filtering these out, which is behaviour that I don’t know how to replicate exactly. We also could consider the distribution of \(3's\) and \(7's\) separately, or jointly (using “and” instead of “or”).

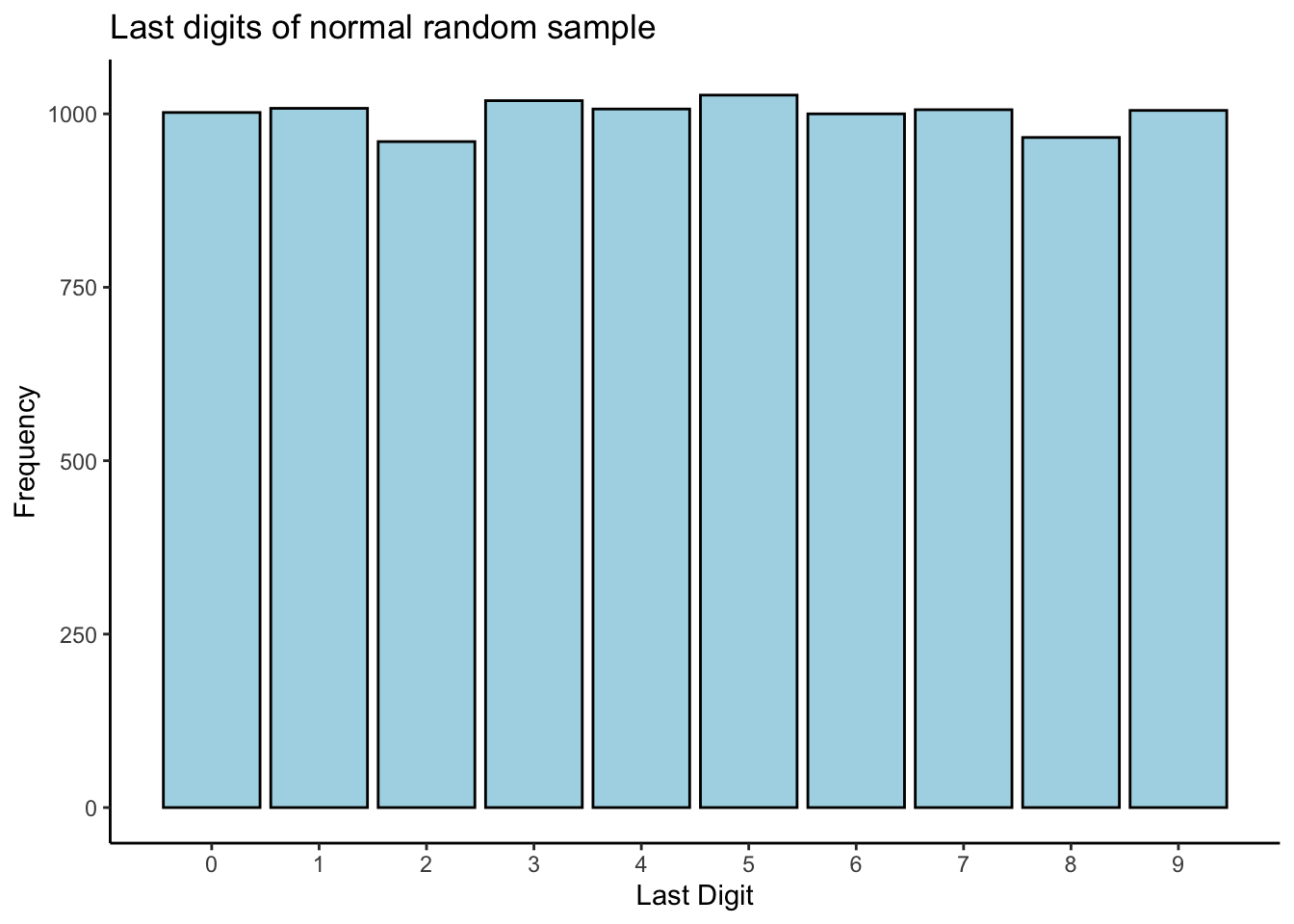

The first question is, how do we generate data that has last digits evenly distributed? Well, any random numbers should work, but to keep things consistent with the real data, let’s try generating from a normal distribution with mean and variance equal to the sample mean and variance of our data, rounded to the nearest integer:

mn <- mean(study1_clean$measurement)

ss <- sd(study1_clean$measurement)

testnumbers <- round(rnorm(10000,mn,ss))

# Plot a chart of the last digits

tibble(x = testnumbers) %>%

mutate(last_digit = as.numeric(substr(x,nchar(x),nchar(x)))) %>%

ggplot(aes(x = last_digit)) +

theme_classic() +

geom_bar(stat = "count",colour = "black",fill = "lightblue") +

scale_x_continuous(breaks = c(0,1,2,3,4,5,6,7,8,9),labels = c(0,1,2,3,4,5,6,7,8,9)) +

labs(title = "Last digits of normal random sample",

x = "Last Digit",

y = "Frequency")

Looks pretty uniform to me! Let’s proceed with our simulation:

set.seed(789685)

# Create a function that simulates a dataset

# and returns the proportion of last digits

# that are either 3 or 7

N <- nrow(study1_clean %>% filter(!(last_digit %in% c(5,0)))) # Size of dataset to simulate

B <- 1e04 # Number of simulations to do

mn <- mean(study1_clean$measurement)

ss <- sd(study1_clean$measurement)

simulate_proportion <- function() {

ds <- round(rnorm(N,mn,ss))

last_digits <- substr(ds,nchar(ds),nchar(ds))

mean(last_digits %in% c("3","7"))

}

# What is the proportion of 3's and 7's in our data,

# after filtering out 5's and 0's?

study_proportion <- study1_clean %>%

filter(!(last_digit %in% c(5,0))) %>%

summarize(p = mean(last_digit %in% c(3,7))) %>%

pull(p)

study_proportion[1] 0.88148# 88.1%. Wow.

# Perform the simulation:

sim_results <- numeric(B)

for (b in 1:B) sim_results[b] <- as.numeric(simulate_proportion() >= study_proportion)

# This is a vector of 0/1 which says whether each simulation's proportion of

# 3's and 7's exceeded the study proportion. Its mean is the simulated probability

# of seeing what we saw in the study, if the digits are actually evenly distributed:

mean(sim_results)[1] 0# Okay... how many?

sum(sim_results)[1] 0In \(B = 10,000\) simulations, I didn’t even get a single dataset that was as extreme as ours. This provides strong evidence against the notion that the digits are, in fact, evenly distributed.

Exercise: how many simulations do you need before you get even one that is as extreme as our dataset?

Exercise: investigate the manner in which we generated these random digits. Try the following:

Simulate from a continuous uniform distribution with mean and variance equal to the sample mean and variance (you have to figure out how to do this),

Simulate from a discrete uniform distribution with mean and variance equal to the sample mean and variance,

(Advanced) Simulate from a discrete uniform distribution as above, but then, chop off the last digit and replace it with one simulated from a discrete uniform distribution on \(\left\{0,\ldots,9\right\}\).

Exercise: repeate the simulation, but filter out numbers ending in \(0\) or \(5\) from the simulated datasets. Do the results change at all?

10.2.4 Testing goodness of fit: math

We may also use mathematics and statistical modelling to answer the question: if the underlying distribution of last digits really was evenly distributed, what is the probability of seeing the data that we saw, or a dataset that is even more extreme under this hypothesis?.

We do this in a clever way: we construct a statistical model that represents the truth, if the truth is what we say it is. Namely, we will define a probability distribution that should represent the distribution of the last digits of our measurements, if the last digits are evenly distributed. We then see how probable our data is under this model. If, under this model, it is very unlikely to see a dataset like ours, then this provides evidence that the model isn’t representative of the truth. And since the model was built under the premise that the digits are evenly distributed, a lack of fit of the model to the observed data provides evidence against the notion that the digits are evenly distributed.

Let’s develop a model. Define \(y_{ij} = 1\) if the last digit of the \(i^{th}\) measurement equals \(j\), for \(j \in J = \left\{1,2,3,4,6,7,8,9\right\}\) (remember: they filtered out measurements ending in \(0\) or \(5\)), and equals \(0\) otherwise. So for example, if the \(i^{th}\) measurement is \(42\) then \(y_{i1} = 0\) and \(y_{i2} = 1\) and \(y_{i3} = 0\) and so on. Define \(y_{i} = (y_{i1},\ldots,y_{i9})\), a vector containing all zeroes except for exactly one \(1\). Then the \(y_{i}\) are independent draws from a Multinomial distribution, \(y_{i}\overset{iid}{\sim}\text{Multinomial}(1,p_{1},\ldots,p_{9})\), with \(\mathbb{E}(y_{ij}) = \mathbb{P}(y_{ij} = 1) = p_{j}\) and \(\sum_{j\in J}p_{j} = 1\). The vectors \(y_{i}\) have the following (joint) density function: \[\begin{equation} \mathbb{P}(y_{i} = (y_{i1},\ldots,y_{i9})) = p_{1}^{y_{i1}}\times\cdots\times p_{9}^{y_{i9}} \end{equation}\] The multinomial is the generalization of the binomial/bernoulli to multiple possible outcomes on each trial. If the bernoulli is thought of as flipping a coin (two possible outcomes), then the multinomial should be thought of as rolling a die (six possible outcomes).

How does this help us answer the question? If the digits are actually evenly distributed, then this means \(p_{1} = \cdots = p_{9} = 1/8\) (why?).

However, the data might tell us something different. We estimate the \(p_{j}\) from out data \(y_{1},\ldots,y_{n}\) by computing the maximum likelihood estimator: \[\begin{equation} \hat{p}_{j} \ \frac{1}{n}\sum_{i=1}^{n}y_{ij} \end{equation}\] which are simply the sample proportions of digits that equal each value of \(j\).

We assess how close the MLEs are to what the true values ought to be using the likelihood ratio. For \(p_{0} = (1/8,\ldots,1/8)\), \(\hat{p} = (\hat{p}_{1},\ldots,\hat{p}_{9})\), and \(L(\cdot)\) the likelihood based off of the multinomial density, \[\begin{equation} \Lambda = \frac{L(p_{0})}{L(\hat{p})} \end{equation}\] Remember the definition of the likelihood, for discrete data: for any \(p\), \(L(p)\) is the relative frequency with which the observed data would be seen in repeated sampling, if the true parameter value were \(p\). The likelihood ratio \(\Lambda\) is the ratio of how often our data would be seen if \(p = p_{0}\), against how often it would be seen if \(p = \hat{p}\). If \(\Lambda = 0.5\), for example, that means that our data would occur half as often if \(p = p_{0}\), compared to if \(p = \hat{p}\). Note that \(0 < \Lambda \leq 1\) (why?).

Lower values of \(\Lambda\) mean that there is stronger evidence against the notion that \(p_{0}\) is a plausible value for \(p\)– that is, that the digits are evenly distributed. But how do we quantify how much less likely is less likely enough? Here is where our previous question comes back. We ask: if the digits were evenly distributed, what is the probability of seeing the data we saw, or something with an even more extreme distribution of digits? To compute this, we need to be able to compute probabilities involving \(\Lambda\).

It turns out that using \(\Lambda\) is very clever, because well, we can do this. There is a Big Theorem which states that if \(p\in\mathbb{R}^{d}\) and \(p = p_{0}\), \[\begin{equation} -2\log\Lambda \overset{\cdot}{\sim} \chi_{d-1}^{2} \end{equation}\] So, if the digits are evenly distributed, then our value of \(-2\log\Lambda\) should be a realization of a \(\chi^{2}_{7}\) random variable. We therefore compute \[\begin{equation} \nu = \mathbb{P}\left(\chi^{2}_{7} \geq -2\log\Lambda\right) \end{equation}\] The quantity \(\nu\) is the probability of observing a distribution of digits as or more extreme than the one we observed in our data, if the distribution of digits truly is even. It’s called a p-value, and it’s one helpful summary statistic in problems where the research question concerns comparing observations to some sort of reference, like we’re doing here.

Let’s compute the likelihood ratio for our data:

# Compute the MLEs

study1_filtered <- study1_clean %>%

filter(!(last_digit %in% c(5,0))) # Should have done this before...

pmledat <- study1_filtered %>%

group_by(last_digit) %>%

summarize(cnt = n(),

pp = n() / nrow(study1_filtered))`summarise()` ungrouping output (override with `.groups` argument)obsvec <- pmledat$cnt

names(obsvec) <- pmledat$last_digit

# Notice how there are no digits ending in 1 in the data

# So the MLE for p1 is zero.

# Need to account for this manually

pmle <- rep(0,8)

names(pmle) <- c(1,2,3,4,6,7,8,9)

pmlevals <- pmledat$pp

names(pmlevals) <- pmledat$last_digit

pmle[names(pmlevals)] <- pmlevals

pmle 1 2 3 4 6 7 8 9

0.0000000 0.0074074 0.5259259 0.0148148 0.0222222 0.3555556 0.0518519 0.0222222 # Truth, if digits evenly distributed

p0 <- rep(1,length(pmle)) / length(pmle)

names(p0) <- names(pmle)

p0 1 2 3 4 6 7 8 9

0.125 0.125 0.125 0.125 0.125 0.125 0.125 0.125 # Compute minus twice the likelihood ratio

multinom_log_likelihood <- function(x,p) {

# x: named vector where the name is j and the value is the count of

# times y_ij = 1 in the sample

# p: named vector with names equal to the unique values of x,

# containing probabilities of each

sum(x * log(p[names(x)]))

}

lr <- -2 * (multinom_log_likelihood(obsvec,p0) - multinom_log_likelihood(obsvec,pmle))

lr[1] 257.16# Compute the probability that a chisquare random variable is greater than this

# value:

1 - pchisq(lr,df = length(pmle) - 1) # zero.[1] 0Exercise: write your own function to implement the multinomial loglikelihood,

using the dmultinom function and a for loop. Compare your results to mine.

We would interpret this result as: the observed data provides strong evidence against the notion that the digits are evenly distributed.

The fact that there is essentially zero chance of seeing what we saw if our claim that the digits are evenly distributed agrees with our simulation. We just did some statistical forensics! Pretty cool. This type of reasoning is abundant in the scientific literature, as are these p-value things. They are useful, but we want you, as stats majors and specialists, to leave this course understanding than statistics is so very much more than just a ritual or toolbox!

library(tidyverse)